I'm very doubtful that the current trajectory of AI will make our lives easier. Indeed, the impressive progress of AI has led me to reflect on the fact that despite huge technological advances over the last 50 years, the lives of the majority of people have got harder and more uncertain. If I compare my own career with that of my dad, he was able to jog along in a job he didn't much like, but basically survived without too much threat, and retired at 58 with a very generous pension. My journey, by comparison, has been a rollercoaster (indeed, a rollercoaster with some bits of the track missing!) and I am seeing people in their 30s (particularly young academics) faring even less well. So what's going on? And - before I delve into that further - it's too easy and lazy simply to blame "capitalism": we need to be more precise.

I suspect the common denominator in the work equation is technological advancement alongside rigid institutional structures. This is not to denigrate technology - it is amazing - but it is to ask deeper questions about our institutions. I think there is a systems explanation for what is happening.

When a new technology arrives in a social system (a society, a business, an institution) it increases the possibilities for surprise in that system. Quite simply, new things become possible which people haven't seen before. Since information entropy is a measure of surprise, we can say that the "maximum entropy" of the social system increases, where the maximum is what is possible - not necessarily what is observed.

What is observed in a social system with a new technology is a degree of surprise (some degree of innovation is observable), but nowhere near the maximum amount of possible surprise. So observable entropy increases, but the maximum entropy increases more. What does this mean for work and workers?

A bit like voltage in electronics, the difference between the maximum potential and the observed reality creates a space in which activity is stimulated. The bigger the space between observed entropy and maximum entropy, the greater the stimulation for activity. This activity is what we do in work. More precisely, work becomes a process of exploring the many ways in which the possible new configurations of practice and technology can be realised. Some of that work is called "research", other aspects of this work might be called "operations", other aspects of it might be called "management", but whatever kind of activity it is, it increasingly involves the exploration of new options.
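To make the distinction between observed and maximum entropy a little more concrete, here is a minimal sketch in Python. It is only a toy illustration under my own assumptions: the "practices" labelled `a`, `b` and `c`, the counts, and the number of possible practices before and after a new technology arrives are all invented for the example, and the function names are hypothetical. It treats observed entropy as the Shannon entropy of what people are actually seen doing, and maximum entropy as the entropy that would result if every possible practice were equally likely.

```python
import math
from collections import Counter


def observed_entropy(events):
    """Shannon entropy (in bits) of the practices actually observed."""
    counts = Counter(events)
    total = len(events)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def maximum_entropy(num_possible):
    """Entropy (in bits) if all possible practices were equally likely."""
    return math.log2(num_possible)


# Invented data: before the new technology, 4 practices are possible
# but only two are actually seen, and one of them dominates.
before = ["a"] * 6 + ["b"] * 2
possible_before = 4

# Invented data: after the new technology, 16 practices are possible,
# but the institution has only adopted one new one ("c").
after = ["a"] * 5 + ["b"] * 2 + ["c"] * 1
possible_after = 16

gap_before = maximum_entropy(possible_before) - observed_entropy(before)
gap_after = maximum_entropy(possible_after) - observed_entropy(after)

print(f"before: observed={observed_entropy(before):.2f} bits, "
      f"maximum={maximum_entropy(possible_before):.2f} bits, gap={gap_before:.2f}")
print(f"after:  observed={observed_entropy(after):.2f} bits, "
      f"maximum={maximum_entropy(possible_after):.2f} bits, gap={gap_after:.2f}")
```

In this toy case the observed entropy rises a little, because one new practice is adopted, but the maximum entropy rises much more, so the gap between the two widens. That widening gap is the "voltage" I am describing.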

The work done in this "work space" between the maximum entropy and the observed entropy is, as David Graeber famously argued in "Bullshit Jobs", mostly pointless. The work is basically doing things that have been, or can be, done in many different ways: it is effectively "redundant". But that's the point - redundancy generation is what must go on in the space between the maximum entropy and the observed entropy. And it is exhausting and dispiriting, particularly if it increases.

This is a bleak outlook because, of all the recent technologies that increase the maximum entropy, AI is in a league of its own. It will accelerate the growth of maximum entropy beyond anything we have yet seen. So what will happen with the observed entropy and the work in the space between?

The problem is the increasing gap between observed entropy and maximum entropy. What keeps the observed entropy so much lower is the structure of institutions. The deepest risk is that the maximum entropy goes off the scale, and the observed entropy - the visible interface to existing institutions - doesn't change very much at all. That will create a pressure-cooker atmosphere within the work system. There will be work, and indeed more of it than ever before, but work will become increasingly febrile and pointless. It will make us sick: the mental health of workers, students and everyone else will suffer.

It would be better if the redundancy-generating space were kept stable rather than allowed to grow. This might be achieved if we consider the drivers for increasing maximum entropy through technology. One of the drivers is noise. It is the noise generated by an existing technology (for example, an AI) which drives the innovation to the next iteration of the technology. If human labour were seen as the effective management of noise, rather than the generation of redundancy, then society might be steered in a way which doesn't cause internal collapse.

Another way of saying this is that uncertainty is the variable to manipulate collectively, and only humans can manipulate this variable. One of the problems with increasing maximum entropy is that labour is directed to do tasks that can be clearly defined. We see this with ChatGPT at the moment: thousands of academics who say "we can use it to do <insert name of well-defined task>". This is looking for your keys where the light is, not where you lost them.

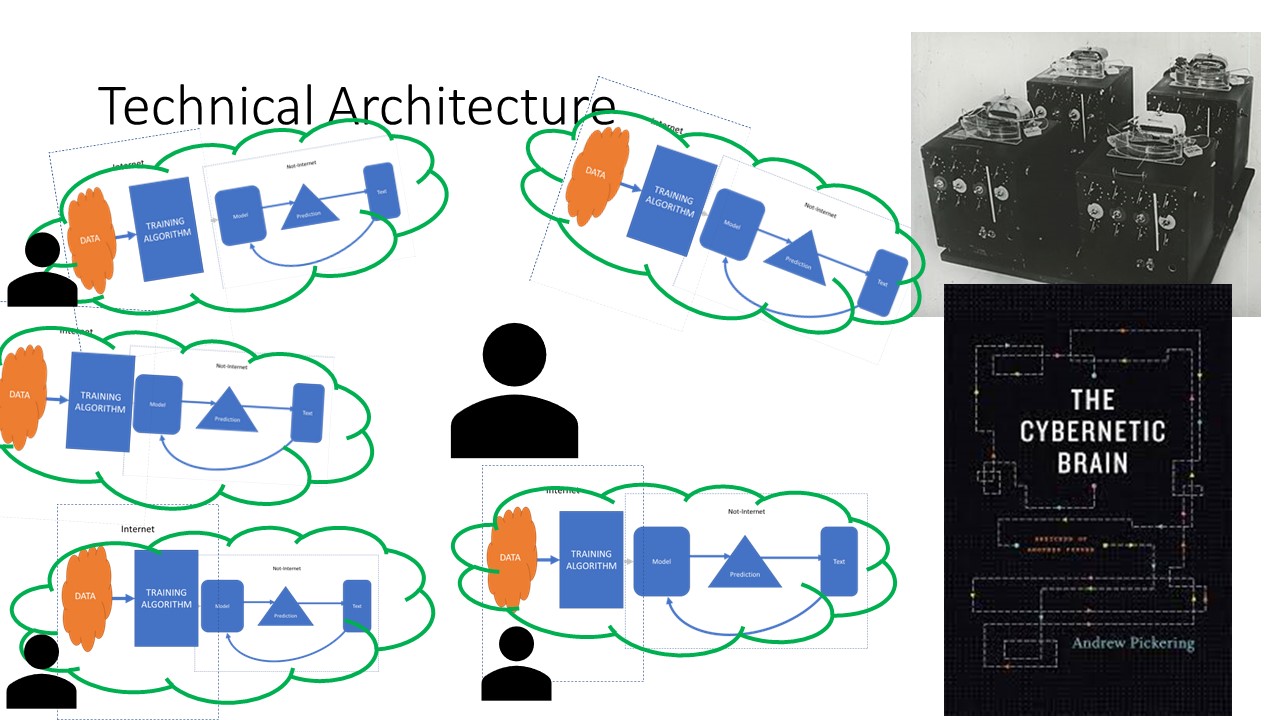

One of the things the technology might be able to do is to direct human labour to where the uncertainty is greatest. Focused in this way, the work is really about exploring differences in understanding between different people of things which nobody is clear about. This is high-variety, convivial, high-level work for the many. Part of this work is to explore the possibilities of new technology - the "redundancy work" in the space between observed and maximum entropy. But the other part of the work is to coordinate intellectual effort in exploring the noise of uncertainty, and the result of that work can help manage the gap between maximum entropy and observed entropy.

What does this look like practically? I think, given that uncertainty is experienced physiologically, and exploring uncertainty together is deeply convivial, this looks like work with a focus on wellness, maybe using technology to identify where wellness might be threatened.

Creating a "wellness system" is a possibility. The consequences of not doing this look far more dire than anyone can yet imagine.