I gave a presentation to the leaders of Learning Support at the University of Copenhagen this morning. I will write a paper about this, but in the meantime this is a blogpost to summarise the key points.

I began by saying that I would say nothing about "stopping the students cheating". As leaders in learning technology in universities, I said, we have no time to worry about this. The technology is improving so fast that what really matters is to think ahead about how things are going to change, and about the strategies that are required to adapt.

I said that basically, we are in "Singin' in the Rain". The movie is a good guide to the tech-rush that's about to unfold.

I also referred to the 2001 Spielberg movie AI, which I didn't understand when I first saw it. I think we will look back on it as a prescient masterpiece.

My own credentials for talking about AI are that I have been involved in an AI diagnostic project in Diabetic Retinopathy for 7 years at the University of Liverpool, and after £1.1m of project funding and then £2m of VC support, this has now been spun out. When the project started I was an AI sceptic (despite being the co-inventor of the novel approach that has led to its success!). I'm not sceptical now.

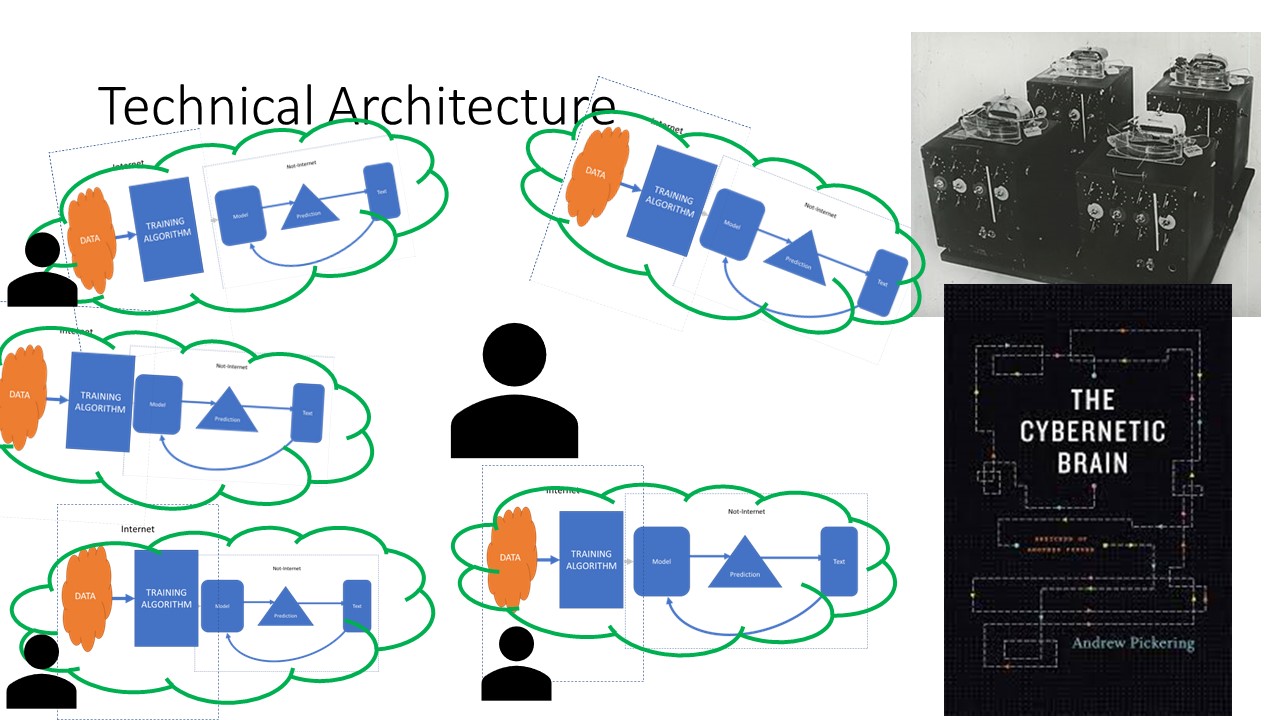

I said that what is really important to understand is how the technology represents a new kind of technical architecture. I represented this with a diagram:

As a term, "AI" is a silly description; "Artificial Anticipation" is much better. The technology is new. It is not a database: it consists of a document called a model (which is a file) that can be thought of as being like a "sieve". The structure of the sieve is configured through a process called "training", which requires huge amounts of data from the internet and a great deal of time. Training also requires "data redundancy" - lots of representations of the same thing.

Since academics have been busy writing papers which are very similar to each other for the last 30 years, ChatGPT has had rich pickings to train on.

If you want to understand the training process, I recommend looking at Google's "Teachable Machine" (see http://teachablemachine.withgoogle.com). This allows you not only to train a machine learning model (to recognise images or objects), but also to download the model file and write your own programs with it. It's designed for children - which is how simple all of this stuff will be quite soon...

Once trained, the "model" does not need to be connected to the internet (ChatGPT's isn't, despite being accessed online). The model can make predictions about the likely categories of data it hasn't seen before (unlike a database, which only gives back what was put into it in response to a query). The better the training, the better the predictions.
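To make the contrast with a database concrete, here is a minimal Python sketch. It is a toy, not how ChatGPT works: the two-number "data points", the labels `cat` and `dog`, and the helper names `train` and `predict` are all hypothetical, and the "model" is just an average of the examples for each label. Note the redundancy in the training examples: several slightly different versions of the same thing.

```python
import math

# A "database": it can only return what was stored under an exact key.
database = {(1.0, 1.0): "cat", (5.0, 5.0): "dog"}

def train(examples):
    """Average the points for each label: a toy stand-in for 'training'."""
    sums = {}
    for (x, y), label in examples:
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}

def predict(model, point):
    """Return a probability per label (softmax over negative distances)."""
    scores = {label: -math.dist(point, centre) for label, centre in model.items()}
    top = max(scores.values())
    weights = {label: math.exp(s - top) for label, s in scores.items()}
    total = sum(weights.values())
    return {label: w / total for label, w in weights.items()}

# Redundant training data: several similar examples of each label.
examples = [((1.0, 1.0), "cat"), ((1.2, 0.8), "cat"), ((0.9, 1.1), "cat"),
            ((5.0, 5.0), "dog"), ((5.1, 4.9), "dog"), ((4.8, 5.2), "dog")]
model = train(examples)

unseen = (1.5, 1.4)               # never stored, never trained on
lookup = database.get(unseen)     # None: the database has nothing for this key
probabilities = predict(model, unseen)
print(lookup)
print(probabilities)              # "cat" gets the higher probability
```

The database returns nothing for a point it has never stored, while the model still offers a graded guess. Nothing in the sketch touches the network: once `model` exists, it is just data that could be saved to a file and used offline.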

All predictions are probabilities. In ChatGPT, every word is chosen on the basis of the probabilities generated by the model. The basic architecture looks like the diagram above. Notice how the text output is fed back into the model as input. Also notice the statistical layer, which does something called "autoregression" to refine the selection from the options presented by the model.
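The feedback loop can be sketched in a few lines of Python. This is a deliberately tiny illustration under stated assumptions: the "model" is only a table of which word follows which in a made-up sentence, and it looks at just the last word, whereas a real language model conditions on much more of the text so far. The names `train_bigram`, `next_word_probabilities` and `generate` are hypothetical.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which word follows which: a toy stand-in for the trained model."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def next_word_probabilities(model, word):
    """Turn the follow-on counts for `word` into probabilities."""
    followers = model.get(word)
    if not followers:
        return {}
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}

def generate(model, start, length, rng):
    """Pick each word by probability, then feed it back in as the next input."""
    output = [start]
    for _ in range(length):
        probs = next_word_probabilities(model, output[-1])
        if not probs:
            break  # the model has never seen anything follow this word
        choices, weights = zip(*probs.items())
        output.append(rng.choices(choices, weights=weights, k=1)[0])
    return output

corpus = "the model predicts the next word and the next word feeds the model"
model = train_bigram(corpus)
rng = random.Random(0)  # fixed seed so the run is repeatable
sentence = generate(model, "the", 8, rng)
print(" ".join(sentence))
```

Each pass through the loop asks the model for probabilities, selects one word, and appends it to the output, which then becomes the input for the next pass.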

This architecture is where the clues are to how profound the impact of the technology is going to be.

Models are not connected to the internet. That means they can stand alone and do everything that ChatGPT does. We can have conversations with a file on our device as if we were on the internet. Spielberg got this spot-on in AI.

Another implication of this, as I (carefully) pointed out to some Chinese students in a presentation at Beijing Normal University a few months back, is that the conversations you have can be entirely private. There need not be any internet traffic. Think about the implications of that.

We are going to see AI models on personal devices doing all kinds of things everywhere.

I made a couple of cybernetic references: one to Ashby's homeostat - because the homeostat's autonomous units coordinated their behaviour with each other in the way that AIs are likely to provide data for other AIs to train themselves. This is likely to be a tipping point. I strongly suggested that people read Andy Pickering's "The Cybernetic Brain".

There's something biological about this architecture. In most machine learning applications the model does not change: ChatGPT's model does not retrain itself, because retraining takes huge amounts of resource and time. What does adapt is the statistical layer which refines the selection. Biologically, it's similar to the model being the genotype (DNA) and the statistical layer being the phenotype (adaptive organism).
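One way to picture a fixed model with an adjustable selection layer is the sketch below. It uses a "temperature" setting, which is my choice of illustration rather than anything shown in the diagram: the scores stand in for a frozen model and never change, while the selection layer reshapes the probabilities drawn from them. The words, the scores and the function name `selection_probabilities` are hypothetical.

```python
import math

# Fixed scores for three candidate next words: hypothetical values
# standing in for the output of a frozen, already-trained model.
FROZEN_SCORES = {"rain": 2.0, "sun": 1.0, "snow": 0.0}

def selection_probabilities(scores, temperature):
    """Softmax with temperature: the scores stay fixed, only the selection changes."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {w: s / temperature for w, s in scores.items()}
    top = max(scaled.values())
    weights = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(weights.values())
    return {w: v / total for w, v in weights.items()}

# Same frozen scores, two different settings of the selection layer.
cautious = selection_probabilities(FROZEN_SCORES, temperature=0.5)
adventurous = selection_probabilities(FROZEN_SCORES, temperature=2.0)
print(cautious)     # probability concentrated on the top-scoring word
print(adventurous)  # probability spread more evenly across the options
```

The low setting sticks closely to the model's favourite word and the high setting wanders more, yet nothing has been retrained in either case.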

This also ties in with AI being seen as an anticipatory system because the academic work on anticipatory systems originally comes from biology: an anticipatory system is a system which contains a model of itself in its environment (Robert Rosen). Loet Leydesdorff, with whom I have worked for nearly 15 years, has developed a model of this (building on Rosen's work) to explain communication in the context of economics, innovation and academic discourse (the Triple Helix). I have found Loet's thinking very powerful to explain this current phase of AI.

Of course, there are limitations to the technology. But some of these - particularly around uncertainty and inspectability - will be overcome, I think (some of my own work concerns this).

The education system is our technology for doing this. It's rather crude and introduces all kinds of problems. It combines documents (books, papers, videos, etc.), which contain knowledge, with the interpretation and communication by teachers and students that those documents require in order to fulfil this "cultural transmission" (someone objected to the word "transmission", and I agree it's an awkward shorthand for the complexity of what really happens).

AI is a document which is also a medium of interpretation and communication. It is a new kind of cultural artefact. What kind of education system do we build around this? Do we even need an education system that looks remotely like what we have now?

I said I think this is what we should be thinking about. It's going to come for us much faster than most senior managers in universities can imagine.

So we simply haven't got time to worry about stopping the kids cheating!
