When we think about selection - for example, natural selection, or the selection of symbols to create or decode a message (Shannon), or the selection of meanings of that message (Luhmann) - there is an implicit notion that selection is a bit like selecting cereal in the supermarket. There is a variety of cereal, and the capacity to select must equal the degree of variety (this is basically a rephrasing of Ashby's Law of requisite variety). Rather like Herbert Spencer's presentation of natural selection as "survival of the fittest", this concept of selection has a close relation to capitalism - both as it manifested in the 19th century economy, and in the 19th century schoolroom (which must, as Richard Lewontin pointed out, have influenced Darwin).

The problem with this idea is that it is assumed that the options available for choosing are somehow independent from the agents that make the choice, and independent from the processes of choosing. But the idea of independent varieties from which a selection is made is a misapprehension. It leads to confusion when we think about how humans learnt that certain things were poison. It is easily imagined that blackberries and poison ivy might have been on the same "menu" at some point in history as "things that potentially could be consumed", and through the unfortunate choices of some individuals, there was social "learning" and the poison ivy was dropped. But the "menu" evolved with the species - the physical manifestation of "food" was inseparable from practices of feeding which had developed alongside the development of society. The eating of poison ivy was always an eccentricity - a transgression which would have been felt by anybody committing it before they even touched the ivy. The taste for novelty is always transgressive in this way - the story of Adam and Eve and the apple carries some truth in conveying this transgressive behaviour.

This should change the way we think about 'selection' and 'variety'. It may be a mistake, for example, to think of the environment having high variety, and individual humans having to attenuate it (as in Beer's Viable System Model). At one level, this is a correct description, yet it overlooks the fact that the attenuation of the environment is something done by the species over history, not by individuals in the moment. Some variety is only "out there" because of historical transgressions. What produces this variety? - or rather, what produces the need to transgress? Colloquially, our thirst for "variety" comes from boredom. It comes from curiosity about what lies beyond the predictable, or a critique of what becomes the norm. From this perspective, redundancies born out of repetition become indicative of the quest for increased variety. This thirst for novelty born from redundancy is most clearly evident in music.

Music generates redundancies at many levels: melodies, pitches, rhythms, harmonies, tonal movements - they all serve to create a non-equilibrium dynamic. Tonal resolutions affirm redundancies of tonality, whilst sowing the seed for the novelty that then may follow. I remember the wonderful Ian Kemp, when discussing Beethoven string quartets at the Lindsay quartet sessions at Manchester University, would often rhetorically ask at the end of a movement, "Well what is he going to do now?... something different!". The "something different" is not a selection from the menu of what has gone before. It is a complete shift in perspective which breaks with the rules of what was established to this point. The genius is that the perspectival shift then makes the connection to what went before.

Bateson's process of bio-entropy is a description of this. The trick is to hover between structural rigidity and amorphous flexibility. Bateson put the tension as between "rigour" - which at extreme brings "paralytic death", and "imagination" which at extreme brings madness (Mind and Nature, "Time is Out of Joint"). In Robert Ulanowicz's statistical ecology, there is a similar Batesonian balance between rigidity and flexibility, as there is in Krippendorff's lattice of constraints (see http://dailyimprovisation.blogspot.co.uk/2016/06/unfolding-complexity-of-entanglements.html).

I suspect selection is a more complex game than Shannon supposes. Our choices are descriptions. Our transgressions are wild poetic metaphors which only at the deepest level connect us to normal life. In Nigel Howard's metagame theory, such wild metaphors are deeper level metagames that transform the game everyone else is playing into something new. I've struggled to understand where and how these metagames arise. The best I can suggest is that as mutual information rises in communicative exchanges, so the degree of "boredom" rises because messages become predictable. That means that the descriptions of A match the descriptions of B. What this boredom actually amounts to is the increasing realisation of the uselessness of rational selection: this is a moment of Nigel Howard's "breakdown of rationality". Bateson would call it a double-bind. To escape it, at some point A or B will generate a new kind of description unknown in the communication so far. This shakes things up. At a deep level, there will be some connection to what has gone before, but at the surface level, it will be radically different - maybe even disturbing.
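To make the idea of rising mutual information a little more concrete, here is a minimal sketch in Python. It estimates the mutual information between the descriptions produced by A and by B over a window of exchanges, and flags "boredom" when the estimate passes a threshold. The function names `mutual_information` and `is_bored`, and the threshold value, are my own assumptions for illustration; they do not come from Shannon, Howard or Bateson.

```python
import math
from collections import Counter

def mutual_information(pairs):
    """Estimate I(A;B) in bits from a list of (a, b) message pairs."""
    n = len(pairs)
    if n == 0:
        raise ValueError("need at least one exchange")
    joint = Counter(pairs)
    count_a = Counter(a for a, _ in pairs)
    count_b = Counter(b for _, b in pairs)
    mi = 0.0
    for (a, b), c in joint.items():
        p_ab = c / n
        # p_ab / (p_a * p_b), written with raw counts
        ratio = c * n / (count_a[a] * count_b[b])
        mi += p_ab * math.log2(ratio)
    return mi

def is_bored(pairs, threshold=0.9):
    """Hypothetical rule: boredom sets in once A's and B's descriptions
    predict each other too well (threshold is an arbitrary assumption)."""
    return mutual_information(pairs) >= threshold

# A and B echoing each other's descriptions: each message predicts the other.
echoing = [("x", "x"), ("y", "y")] * 10
# A and B producing unrelated descriptions.
unrelated = [("x", "x"), ("x", "y"), ("y", "x"), ("y", "y")] * 5

print(mutual_information(echoing), is_bored(echoing))
print(mutual_information(unrelated), is_bored(unrelated))
```

One caveat with this estimate: if A and B both repeat a single unchanging message, the measured mutual information is zero, because there is no uncertainty left to share. So the simple measure captures "matching descriptions" only while there is still some variety in what is said.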

In music this unfolds in time. It is the distinction between a 1st and 2nd subject in sonata form, or perhaps at a micro-level, between a fugal subject and its countersubject. A theme or section in the music is coherent because it exists within a set of constraints. Descriptions are made within those constraints. The "transgressive" exploration of something different occurs when the constraints change. This can happen when redundancies in many elements coincide: so, for example, a rhythm is repeated alongside a repeated sequence of notes, or a harmony is reinforced (the classic example is in the climax of the Liebestod in Wagner's Tristan). These redundancies reduce the richness of descriptions which can be produced, highlighting more fundamental constraints, which can then be a seed for the production of a new branch of descriptions. It is, of course, also what happens in orgasm.

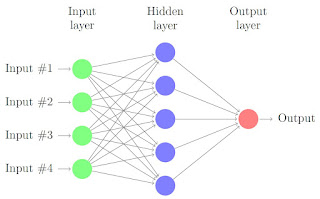

I've been trying to create an agent-based model which behaves a bit like this. It's a challenge which has left me with some fundamental questions about Shannon redundancy. The most important question is whether Shannon redundancy is the same as McCulloch's idea of "Redundancy of potential command", which he saw in neural structures (and in Nelson's navy!). Redundancy of potential command is the number of alternatives I have for performing function x. Shannon redundancy is the number of alternative descriptions I have for x. McCulloch's redundancy can be easily visualised as alternative pathways through the brain. Shannon redundancy is typically thought about (and measured) as repetition. I think the two concepts are related, but the connection requires us to see that repetition is never really repetition; no two events are the same.
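For reference, the usual way of measuring Shannon redundancy is R = 1 - H/H_max, where H is the entropy of the observed symbols and H_max is the entropy the same alphabet would have if every symbol were equally likely. The sketch below is only that standard measure, not the agent-based model itself; the function names and the choice of alphabet sizes (12 pitch classes, 4 durations) are assumptions made for the example.

```python
import math
from collections import Counter

def shannon_entropy(symbols):
    """Entropy in bits of the empirical distribution of a sequence."""
    total = len(symbols)
    if total == 0:
        raise ValueError("need at least one symbol")
    counts = Counter(symbols)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def shannon_redundancy(symbols, alphabet_size):
    """R = 1 - H / H_max, with H_max = log2(alphabet_size)."""
    if alphabet_size < 2:
        raise ValueError("alphabet must have at least two symbols")
    h_max = math.log2(alphabet_size)
    return 1.0 - shannon_entropy(symbols) / h_max

# A repeated three-note figure, measured against 12 pitch classes (assumed).
melody = list("CDECDECDECDE")
# A repeated long-short rhythm, measured against 4 possible durations (assumed).
rhythm = ["long", "short"] * 6

print(shannon_redundancy(melody, 12))
print(shannon_redundancy(rhythm, 4))
```

Note that this measure only counts how often each symbol occurs. It treats every "C" as identical to every other "C", which is exactly the assumption that the argument here calls into question.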

Each repetition which is counted by Shannon reveals subtle differences in the constraint behind the description that a is the same as b. Each repetition brings into focus the structure of constraint. Each overlapping of redundancy (like the rhythm and the melody) brings the most fundamental constraint more into focus. As it is brought more into focus, so new possibilities become more thinkable. It may be like fractal image compression, or discrete wavelet transforms...
