I'm wrestling with the problem of formalising the way in which learning conversations emerge. Basically, I think that when a teacher cares about what they do, and reflects carefully on how they act, they listen carefully to the constraints of the teacher-learner situation. When teaching is done well, a teacher will rarely insist on "drilling" concepts and giving textbook accounts of them - particularly when it is obvious that the learner doesn't understand from the start. What they do instead is find out where the learner is: the process really is one of leading out (educere) - they want to understand the constraints bearing upon the learner, and to reveal their own constraints of understanding. The process is fundamentally empathic. Unfortunately we don't see this kind of behaviour from teachers very often. The system encourages them to act inhumanely towards learners - which is why I want to formalise something better.

My major line of inquiry has concerned teaching and learning as a political act. Education is about power, and when it is done well, it is about love, generosity and emancipation. The question is how the politics is connected to constraints. This has set me on the path of examining political behaviour through the lens of double binds, metagame theory, dialectics and so on. With metagame theory in particular, I found myself staring at models of the recursion of thought: intractable loops of connections between constraints which would erupt into patterns of apparently irrational behaviour. It looks like a highly complex network.

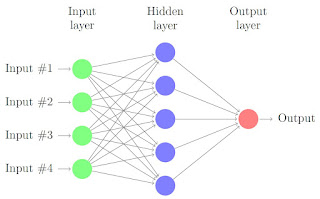

We're used to complex networks in science today. I thought that the complex network of Howard's metagame trees looked like a neural network - and this made me uncomfortable. There is a fundamental difference between a complex network that maps processes of reasoning and a complex network that maps processes of cognition. The map of cognition, the neural net, is a model of an abstract cognising subject (a transcendental subject) which we imagine could be a "bit like us". Actually, it isn't - it's a soulless machine - all wires, connections and an empty chest. That's not at all like us - it would be incapable of political behaviour.

The logical map, or the map of reasoning - which is most elegantly explored in Nigel Howard's work - is not a model of how we cognise, but a model of how we describe. What is the difference? The distinction is about communication and the process of social distinction-making. Models of cognition, like a neural network, are models of asocial cognition - distinction-making within the bounds of an abstract skin in an abstract body. The constraints within which such an "AI" distinction is made are simply the constraints of the algorithm producing it, and (more fundamentally) the ideas of the programmer who programmed it in the first place.

A map of reasoning processes and political behaviour is, by contrast, precisely concerned with constraint - not with the particular distinction made. In conversation with a machine, or a humanoid robot, a teacher would quickly puzzle over the responses of the machine and think "there are some peculiar constraints here which I cannot fathom which make me think this is not a human being". Perhaps, more than anything else, the tell-tale sign would be the failure to act irrationally...
