The act of analysis is ambiguous. On the one hand, analysis is a process of seeking explanations for phenomena. For example, a musical analysis will seek to provide answers to questions about the experience of a piece of music. For those who uphold the techniques of analysis as providing access to some latent truth, the analysis will explain why such and such is the case, or (most commonly) defend value judgements. Schenker, for example, used his musical analyses to make value distinctions between different kinds of music. It may well be that his search for a cosmological explanation drove him to develop his analytical technique in the first place. The search for totalising explanation is largely responsible for music theories from antiquity onwards.

On the other hand, and more modestly, analysis provides a description. Schenker's graphs describe music in a particular way. Many other kinds of description are possible, and each focuses attention on one particular aspect or another - Schenker, for example, privileges tonal structure above rhythm. In a world of analytical and critical pluralism, music analysts tend either to choose one method, thus buying into a particular ontological stance at the outset, or to combine methods and perform some kind of triangulation in their results.

How Learning Analytics relates to this ambiguity of analysis is an important question. Whether or not Learning Analytics seeks to provide explanations, it is open to being interpreted as doing so, which makes the sometimes spurious correlations that appear in analytic reports (for example, between different pedagogies and learner outcomes) potentially dangerous. When analysing the dynamics of learning analytics, we have to consider the social positioning of an analytic report within the organisational context of education.

Everyone has theories about why things do and don't happen in education. However, only those in positions of power have access to large datasets of everything that happens. Consequently, analytical statements are made by the powerful. These are statements about the behaviour and desired outcomes of those with less power - learners and (often) teachers. Judgements are based on the data which can be captured; data which cannot be captured is ignored. Not only are analytic statements produced by the powerful, they are presented to the even-more-powerful, who can exercise their power by changing policies, institutional organisation, staff management, etc. Since educational institutions are immensely confusing, analytic reports have the attraction of summarising outcomes and presenting correlations between interventions and outcomes, which will suggest some kind of management intervention at a high level. Simplistic causal thinking is tempting: "The students have left the course because...". In the face of dismal events, and a powerful statement of correlation between interventions and those events, it is hard to say in the board meeting that "it is only a description".

To take the analytic report as an explanation to be acted on is like making a judgement about Hamlet's state of mind by reading a second-hand account of the movie of the play within the play. The power of data analysis is that it presents itself not as a subjective account, but as rational, algorithmically generated and objective. Yet just as a teacher would direct a learner to read Hamlet, view interpretations, read criticism and so on, so a manager should explore analytics as descriptions, compare different descriptions, ask questions about the institution, about the learners, etc. Fundamentally, any analytics should open up a richer conversation, not close it down. It is only by exploring multi-layered descriptions that managers may become experts in their institutions, just as we would wish learners to become experts in Hamlet. The problem is that the managerial space for inquiry about the institution is even more restricted than the learner's space for inquiry. But to situate analytics as description can open this up.

It can also open up the space for learner conversation. Recently, I've been experimenting with analytical reports with some medical students. They are on work placement in medical institutions at the moment, equipped with an iPad e-portfolio system. The e-portfolio system was lacking a reporting engine, so there was an opportunity to do something different. Every Friday, the learners are emailed a PDF summary of their activity. They all receive this at the same time and, consequently, they all talk to each other about what it contains. In the early weeks, it simply kept a count of the skills practised which were identified in their portfolio submissions:

It was important to keep the students posting during their placements, and so a measure of their frequency of posting was the next addition to the report:

More modestly, analysis provides a description. Schenker's graphs describe music in a particular way. Many other kinds of description are possible, and each focuses attention on one particular aspect or another - Schenker, for example, privileges tonal structure above rhythm. In a world of analytical and critical pluralism, there are many different kinds of description available. Music analysts tend to choose one method or the other, thus buying into a particular ontological stance at the outset, or seek to combine methods and perform some kind of triangulation in their results.

How Learning Analytics relates to this ambiguity of analysis is an important question. Whether Learning Analytics does or does not seek to provide explanations, it is open to be interpreted in this way, making the sometimes spurious correlations which appear in analytic reports (for example, between different pedagogies and learner outcomes) potentially dangerous. When analysing the dynamics of learning analytics, we have to consider the social positioning of an analytic report within the organisational context of education.

Everyone has theories about why things do and don't happen in education. However, only those in positions of power have access to large datasets of everything that happens. Consequently, analytical statements are made by the powerful. These are statements about the behaviour and desired outcomes of those with less power - learners and (often) teachers. Judgements are based on that data which can be captured; data which cannot be captured is ignored. Not only are analytic statements produced by the powerful, they are presented to the even-more-powerful who can exercise their power by changing policies, institutional organisation, staff management, etc. Since educational institutions are immensely confusing, analytic reports have the attraction of summarising outcomes and presenting correlations between interventions and outcomes, which will suggest some kind of management intervention at a high level. Simplistic causal thinking is tempting: "The students have left the course because...". In the face of dismal events, and a powerful statement of correlation between interventions and those events, is it hard to say in the board meeting that "it is only a description".

To take the analytic report as an explanation to be acted on is like making a judgement about Hamlet's state of mind by reading a second hand account of the movie of the play within the play. The power of data analysis is that it presents itself not as a subjective account, but as rational, algorithmically-generated and objective. Yet just as a teacher would direct a learner to read Hamlet, view interpretations, read criticism and so on, so a manager should explore analytics as descriptions, compare different descriptions, ask questions about the institution, about the learners, etc. Fundamentally, any analytics should open up a richer conversation, not close it down. It is only by the path of exploring multi-layered descriptions that managers may become experts in their institutions, just as we would wish learners become experts in Hamlet. The problem is that the managerial space for inquiry about the institution is even more restricted than the learner's space for inquiry. But to situate analytics as description can open this up.

It can also open up the space for learner conversation. Recently, I've been experimenting with analytical reports with some medical students. They are on work placement in medical institutions at the moment, equipped with an iPad e-portfolio system. The e-portfolio system was lacking a reporting engine, so there was an opportunity to do something different. Every Friday, the learners are sent by email a PDF summary of their activity. They all receive this at the same time, and consequently, they all talk to each other about what it contains. In the early weeks, it simply kept a count of the skills practiced which were identified in their portfolio submissions:

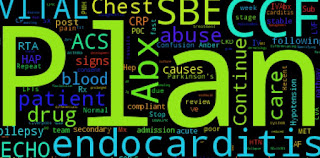

After a couple of weeks, it was possible to add a word cloud to this, and encourage the students to reflect on what they were seeing most frequently.
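For readers who want to try something similar, here is a minimal sketch of how the three descriptions mentioned so far (skill counts, posting frequency and the word frequencies behind a word cloud) might be computed. It is not the reporting code used with these students: the `Submission` record, its field names, the stopword list and the sample data are all assumptions made for illustration, and producing the PDF or the word cloud image is left out.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
import re


@dataclass
class Submission:
    # Hypothetical record shape; the real e-portfolio export is not described.
    student: str
    posted_on: date
    skills: list
    text: str


# A tiny illustrative stopword list, not a complete one.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "on", "with", "was", "i"}


def weekly_summary(submissions, student):
    """Describe one student's activity: skill counts, posts per ISO week,
    and word frequencies (the raw material for a word cloud)."""
    mine = [s for s in submissions if s.student == student]
    skill_counts = Counter(skill for s in mine for skill in s.skills)
    # Key by (ISO year, ISO week) so weeks from different years do not collide.
    posts_per_week = Counter(tuple(s.posted_on.isocalendar()[:2]) for s in mine)
    words = (w for s in mine for w in re.findall(r"[a-z']+", s.text.lower()))
    word_counts = Counter(w for w in words if w not in STOPWORDS)
    return {
        "skills": skill_counts,
        "posts_per_week": posts_per_week,
        "words": word_counts,
    }


# Made-up sample data, purely for illustration.
submissions = [
    Submission("student_a", date(2024, 3, 4), ["venepuncture"],
               "Took blood with the nurse"),
    Submission("student_a", date(2024, 3, 6), ["venepuncture", "history taking"],
               "Took a patient history"),
    Submission("student_b", date(2024, 3, 5), ["suturing"],
               "Observed suturing in clinic"),
]

report = weekly_summary(submissions, "student_a")
print(report["skills"].most_common())
print(dict(report["posts_per_week"]))
print(report["words"].most_common(3))
```

The point of keeping the summary as plain counts is that each one is only a description: the same submissions could just as easily be summarised in other ways, and comparing those alternatives is where the conversation starts.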

What's interesting about this is that after each report, there is a spike in the students' activity as they react to the different descriptions of the data they are presented with. Since everyone knows that everyone else has received their report at the same time, it is inevitable that they will compare themselves with each other. The timing of the reporting, and the fact that it is pushed to the students rather than requiring them to log in to a dashboard (which many will not do), seems to be significant.

Most broadly, it appears that the report and its contents act as a constraint which directs the students into conversation and action, in much the same way as presenting them with new learning content or a learning activity might do. Analytics as description is pedagogical. The possibilities for extending this and producing new kinds of description of user action can open out into all sorts of things... But it is not just the contents of the analytical report which matter; the use of time as a constraint also matters - doing things at the same time, particularly with a distributed group, is powerful.

I'm thinking about the implications of this. If analytics is treated as description rather than explanation, then the emphasis in its use becomes pedagogical, not directorial: it is about stimulating conversation. In their online teaching practice, universities tend to remain committed to producing content to stimulate discussion, and it often doesn't work. Wouldn't it be better if they stimulated conversation with imaginative analytic descriptions of activity so far? Could that steer us away from the pathological content-production cycle most e-learning units are obsessed by? I'd like to explore this.

More important are the implications for management. If management sees learning analytics as descriptive rather than explanatory, then it too is a prompt for management discussion. It should support the learning processes of managers as they seek to become experts in the life of the institution. It ought to drive them to inquire more deeply about life on the ground. I think if we could make this really happen, we would have much healthier institutions.
