I had a great visit to Cambridge to see Steve Watson the other day - an opportunity to talk about cybernetics and education, machine learning, and possible projects. He also shared with me a great new book on cybernetics and communication about which I will write later - it looks brilliant: https://www.aup.nl/en/book/9789089647702/sacred-channels

One thing came up in conversation that resonated with me very strongly. It was about empirically exploring the moment-to-moment experience of education - the dynamics of the learning conversation, or of media engagement, in the flow of time. What's the best thing we can do? Well, probably ethnography. And yet, there's something which makes me feel a bit deflated by this answer. While there are some great ethnographic accounts out there, it all becomes very wordy: that momentary flow of experience which is beyond words becomes pages of (sometimes) elegant description. I've been asking myself if we can do better: to take experiences that are beyond words, and to re-represent them in other ways which allow for a meta-discussion, but which also are beyond words in a certain sense.

Of course, artists do this. But then we are left with the same problem as people try to describe what the artist does - in pages of elegant description!

This is partly why Alfred Schutz's work on musical communication really interests me. Schutz wanted to understand the essence of music as communication. In the process, he wanted to understand something about communication itself as being "beyond words". Schutz's descriptions are also a bit wordy, but there are some core concepts: "tuning-in to one another", "a spectrum of vividness of sense impressions", and most interestingly, "polythetic" experience. Polythetic is an interesting word - which has led me to think that polythetic analysis is something we could do more with.

If you google "polythetic analysis", you get an approach to data clustering where things are grouped without having any core classifiers which separate one group from another. This is done over an entire dataset. Schutz's use of polythetic is slightly different, because he is interested in the relations of events over time, where there is never any core classifier which connects one event to another, and yet they belong together because subsequent events are shaped by former events. I suppose if I want to distinguish Schutz from the more conventional use of polythetic, then it might be called "temporal polythetic" analysis.

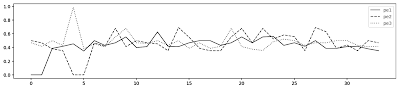

While there are no core classifiers which distinguish events as belonging to one another, there is a kind of "dance" or "counterpoint" between variables. Schutz is interested in this dance. I've been working on a paper where the dance is analysed as a set of fluctuations in the entropy of different variables. When we look at the fluctuations, patterns can be generated, much like the patterns above (which are from a Bach 3-part invention). The interesting question is whether one person's pattern becomes tuned-in to another person's. If it is possible to compare the patterns of different individuals over time, then it is possible to have a meta-conversation about what might be going on, and to compare different experiences and different situations. In this way, a polythetic comparison of online experience versus face-to-face might be possible, for example, or a comparison of watching different videos.
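To make the idea of comparing entropy patterns a little more concrete, here is a minimal sketch. It is not the method from the paper: the sliding window, the use of Pearson correlation as a stand-in for "tuning-in", and the two made-up symbol streams are all assumptions chosen for illustration.

```python
import math
from collections import Counter


def shannon_entropy(symbols):
    """Shannon entropy (in bits) of the symbols in one window."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def entropy_trace(sequence, window):
    """Entropy of each sliding window of length `window` over the sequence."""
    return [
        shannon_entropy(sequence[i:i + window])
        for i in range(len(sequence) - window + 1)
    ]


def pearson(xs, ys):
    """Pearson correlation of two equal-length series (0.0 if either is flat)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


# Hypothetical coded streams for two speakers (say, classes of pitch contour).
# speaker_b is speaker_a with the labels "a" and "b" swapped, so the two
# streams rarely share a symbol at the same moment.
speaker_a = list("aaabacabbcabcabc")
speaker_b = list("bbbabcbaacbacbac")

trace_a = entropy_trace(speaker_a, window=4)
trace_b = entropy_trace(speaker_b, window=4)

# A crude, assumed measure of how closely the two patterns move together.
similarity = pearson(trace_a, trace_b)
print(round(similarity, 3))
```

The two speakers here almost never produce the same symbol at the same moment, yet their entropy traces rise and fall together. That is roughly the sense in which events can belong together without a core classifier connecting them.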

So in communication, or conversation, there are multiple events which occur over time: Schutz's "spectrum of vividness" of sense impressions. As these events occur, and simultaneously to them, there is a reflective process whereby a model which anticipates future events is constructed. This model might be a bit like the fractal-like pattern shown above. In addition to this level of reflection, there is a further process whereby there are many possible models, many possible fractals, that might be constructed: a higher level process requires that the most appropriate model, or the best fit, is selected.

Overall this means that Schutz's tuning-in process might be represented graphically in this way:

This diagram labels the "flow of experience" as "Shannon redundancy" (the repetitive nature of experience), the reflexive modelling process as "incursive", and the selection between possible models as "hyperincursive". These last two terms follow the work on anticipatory systems by Daniel Dubois.
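For readers who have not met the term, Shannon redundancy has a standard textbook definition. The post does not say how it would be estimated in practice, so what follows is only the general form, for a variable with $N$ possible values occurring with probabilities $p_i$:

$$
H = -\sum_{i=1}^{N} p_i \log_2 p_i, \qquad H_{\max} = \log_2 N, \qquad R = 1 - \frac{H}{H_{\max}}
$$

$R$ is 0 when every value is equally likely, and it reaches 1 when a single value always occurs, which is the sense in which redundancy measures repetitiveness.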

Imagine if we analysed data from a conversation: everything can have an entropy over time - the words used, the pitch of the voice, the rhythm of words, the emphasis of words, and so on. Or imagine we examine educational media: we can look at the use of camera shots, or slides changing, or words on the screen, and spoken words. Our experience of education and media is all contrapuntal in this way.
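As a rough sketch of what this might look like with data, the snippet below computes an entropy "line" for each of two parallel streams from a single utterance. Everything in it is an assumption for illustration: the data is hand-made, the pitch is binned into four equal-width classes, and the windows do not overlap.

```python
import math
from collections import Counter


def entropy_bits(symbols):
    """Shannon entropy (in bits) of a list of discrete symbols."""
    counts = Counter(symbols)
    total = len(symbols)
    return sum((c / total) * math.log2(total / c) for c in counts.values())


def bin_values(values, n_bins):
    """Map continuous values (e.g. pitch in Hz) to integer bin labels."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)
    width = (hi - lo) / n_bins
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


def counterpoint(streams, window):
    """Per-window entropy of each named stream, in non-overlapping windows."""
    length = min(len(seq) for seq in streams.values())
    starts = range(0, length - window + 1, window)
    return {
        name: [entropy_bits(seq[i:i + window]) for i in starts]
        for name, seq in streams.items()
    }


# Hypothetical, hand-made data: eight consecutive moments of one utterance.
words = ["so", "the", "the", "the", "dance", "is", "a", "pattern"]
pitch_hz = [110.0, 112.0, 111.0, 180.0, 182.0, 181.0, 183.0, 180.0]

lines = counterpoint(
    {"words": words, "pitch": bin_values(pitch_hz, n_bins=4)},
    window=4,
)
for name, values in lines.items():
    print(name, [round(v, 3) for v in values])
```

In this toy case the entropy of the words rises in the second window while the entropy of the pitch falls to zero: two lines moving against each other, which is the kind of counterpoint described above.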

Polythetic analysis presents a way in which the counterpoint might be represented and compared, acting as a kind of "imprint" of meaning-making. While ethnography tries to articulate the meaning (often using more words than were in the initial situation), analysing the imprint of the meaning may enable us to create representations of the dynamic process, and to make richer and more powerful comparisons between different kinds of experience.