These are notes for a paper on music. They are not meant to be in any way coherent yet!

1 Introduction

Over the centuries in which music has been considered as a scientific phenomenon, attention has tended to fall on music’s relation to number and proportion, which has been the subject of scholarly debate since antiquity. In scientific texts on music from Pythagoras to the Enlightenment, the emphasis has been on those aspects of sound which are synchronic, of which the most significant are harmony and the proportional relation between tones. Emerging scientific awareness of the physical properties of sound led scientists to consider deeper analysis of the universe. This scientific inquiry exhibited feedback with music itself: deeper scientific understanding inspired by music, harmony and proportion led to scientific advance in the manufacture of musical instruments and the consequent emergence of stylistic norms.

Less attention has been paid to music’s diachronic structure, despite the fact that synchronic factors such as timbre and harmony appear strongly related to diachronic unfolding, and that the diachronic dimension of music typifies what Rosen describes when he writes that “the reconciling of dynamic opposites is at the heart of the classical style”. In more recent years, the role of music in understanding psychosocial and biological domains has increased. Recent work in the area of “Communicative Musicality” has focused more on diachronic unfolding alongside synchronic structure, for it is here, it has been suggested, that the root of music’s communication of meaning lies, and that this communication is, in the suggestion of Langer, a “picture of emotional life”.

Diachronic structures present challenges for analysis, partly because dialectical resolution between temporally separated moments necessarily entails novelty, while the analysis throws up fundamental issues of growth within complexes of constraint. These topics of emerging novelty within interacting constraints have absorbed scientific investigation in biology and ecology in recent years. Our contribution here is to point to the homologous analysis of innovation in discourse, and so we draw on insights into the dynamics of meaning-making within systems which have reference as a way of considering the meaning-making within music, which has no reference, with the aim of providing insights into both.

The value of music for science is that it focuses attention on the ineffable aspects of knowledge: a reminder of how much remains unknown, how the reductionism of the Enlightenment remains limited, and how other scientific perspectives, theories and experiments remain possible. And for those scientists for whom music is a source of great beauty, no contemplation of the mysteries of the universe could fail to consider the mysteries of music.

2 What do we mean by meaning?

Any analysis of meaning invites the question “what do we mean by meaning?” For an understanding of meaning to be meaningful, that understanding must participate in the very dynamics of meaning communication that it attempts to describe. It may be because of the fundamental problems of trying to identify meaning that focus has tended to shift towards understanding “reference” which is more readily identifiable. There is some consensus among scholars focused on the problem that meaning is not a “thing” but an ongoing process: whether one calls this process “semiosis” (Peirce), or Whitehead’s idea of meaning, or the result of the management of cybernetic “variety” (complexity). The problem of meaning lies in the very attempt to classify it: while reference can be understood as a “codification” or “classification”, meaning appears to drive the oscillating dynamic between classification and process in mental process. So there is a fundamental question: is meaning codifiable?

For Niklas Luhmann, this question was fundamental to his theory of “social systems”. Luhmann believed that social systems operate autopoietically by generating and reproducing communicative structures of transpersonal relations. Through utterances, codes of communication are established which constrain the consciousness of individuals who reproduce and transform those codified structures. Luhmann saw the different social systems of society as self-organising discourses which interact with one another. Thus, the “economic system” is distinct from the “legal system” or the “art system”. Meaning is encoded in the ongoing dynamics of the social system. An individual’s utterance is meaningful because the individual’s participation in a social system leads to coordinated action by virtue of the fact that one has some certainty that others are playing the same game: one is in tune with the utterances of others, and with the codified social system within which one is operating. Luhmann borrowed a term which Parsons invented, inspired by Schutz’s work: “double contingency”. All utterances are made within an environment of expectation of the imagined responses of the other (what Parsons calls “Ego” and “Alter”).

The problem with this perspective is that meaning results from a kind of social harmony: meaning ‘conforms’. Fromm, for example, criticizes this attitude as the “cybernetic fallacy”: “I am as others wish me to be”. Yet meaningful things are usually surprising. An accident in the street is meaningful to all who witness it, but it is outside the normal codified social discourse. Even in ordinary language, one emphasizes what is meaningful by changing the pitch or volume of one’s voice on a particular word, or by gesturing to some object as yet unseen by others: “look at that!”. Whatever we mean by meaning, the coordination of social intercourse and mutual understanding is one aspect, but novelty or surprise is the critical element for something to be called “meaningful”. It is important to note that one could not have surprise without the establishment of some kind of ‘background’.

However, there is a further complication. It is not that surprise punctuates a steady background of codified discourse. Codified discourse creates the conditions for novelty. The effects of this have been noted in ecology (Ulanowicz) and biology (Deacon). The establishment of regularity and pattern appears to create the conditions for the generation of novelty in a process which is sometimes characterized as “autocatalytic” (Deacon, Ulanowicz, Kauffman). This, however, is a chicken-and-egg situation: is it that meaning is established through novelty and surprise against a stable background, or is it the stable background which gives rise to the production of novelty, which in turn is deemed to be meaningful?

This dichotomy can be rephrased in terms of information theory. This is not to make a claim for a quantitative index of meaning, but a more modest claim that quantitative techniques can be used to bring greater focus to the fundamental problem of the communication of meaning. Our assertion is that this understanding can be aided through the establishment of codified constraints through techniques of quantitative analysis in order to establish a foundation for a discourse on the meta-analysis of meaningful communication. This claim has underpinned prior work in communication systems and economic innovation, where one of us has analysed the production of novelty as the result of interacting discourses between the scientific community, governments and industries. Novelty, it is argued, results from mutual information between discourses (in other words, similarly codified names or objects of reference), alongside a background of mutual redundancy or pattern. Information theory provides a way of measuring both of these factors. In conceiving of communication in this way, there is a dilemma as to whether it is the pattern of redundancy which is critical to communication or whether it is the codified object of reference. Music, we argue, suggests that it is the latter.

3 Asking profound questions

In considering music from the perspective of information theory, we are challenged to address some fundamental questions:

1. What does one count in music?

2. From where does musical structure emerge?

3. What is the relation between music’s synchronic structure and its diachronic unfolding?

4. Why is musical experience universal?

4 Information and Redundancy in Music: Consideration of an ‘Alberti bass’

Music exhibits a high degree of redundancy. Musical structure relies on regularity, and in all cases of the establishment of pattern and regularity, music presents multiple levels of interacting pattern. For example, an ‘Alberti bass’ establishes regularity, or redundancy, in the continual rhythmic pulse of the notes that form a harmony. It also exhibits regularity in the notes that are used, and the pattern of intervals which are formed through the articulation of those notes. It also exhibits regularity in the harmony which is articulated through these patterns. Its sound spectrum identifies a coherent pattern. Indeed, there is no case of a musical pattern which is not subject to multiple versions of redundant patterning: even if a regular pattern is established on a drum, the pattern is produced in the sound, the silence, the emphasis of particular beats, the acoustic variation from one beat to another, and so on. Multiple levels of overlapping redundancy mean that no single musical event is ever exactly the same. This immediately presents a problem for the combinatorics of information theory, which seeks to count occurrences of like-events. No event is ever exactly ‘like’ another because the interactions of the different constraints for each single event overlap in different ways. The determination of a category of event that can be counted is a human choice. The best that might be claimed is that one event has a ‘family resemblance’ to another.

The Alberti bass, like any accompaniment, sets up an expectation that some variety will occur which sits within the constraints that are established. The critical thing is that the expectation is not for continuation, but for variety. How can this expectation for variety be explained?

In conventional information-theoretical analysis, the entropy of something is essentially a summation, weighted by probability, of the log-probabilities of the different codified events which are deemed to be possible and can be counted. This approach suffers from an inability to account for those events which emerge as novel, and which could not have been foreseen at the outset. In other words, information theory needs to be able to account for a dynamically self-generating alphabet.

Considering the constraint, or the redundancy, within which events occur presents a possibility for focusing on the context of existing or potentially new events. But since the calculation of redundancy requires the identification of information together with the estimation of the “maximum entropy”, this approach is also restricted in accounting for novelty.
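For reference, the standard quantities behind the two preceding paragraphs can be written out. The symbols are notation assumed here rather than taken from these notes: p_i is the relative frequency of the i-th counted event and N is the number of event categories deemed possible at the outset.

```latex
H = -\sum_{i=1}^{N} p_i \log_2 p_i, \qquad
H_{\max} = \log_2 N, \qquad
R = 1 - \frac{H}{H_{\max}}
```

Both H_max and R depend on N being fixed in advance, which is where an alphabet that generates itself during the piece causes difficulty.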

However, we have suggested that the root of the counting problem is that nothing is exactly the same as something else. But, some things are more alike than others. If an information theoretical approach can be used to identify the criteria for establishing likeness, or unlikeness, then this might be used as a generative principle for generating the likeness of things which emerge but cannot be seen at the outset. Since novelty emerges against the context of redundancy, and redundancy itself generates novelty, a test of this approach is whether it can predict the conditions for the production of novelty.

In other words, it has to be able to distinguish the dynamics between the Alberti bass which articulates a harmony for 2 seconds, and an Alberti bass which articulates the same harmony for more than 1 minute with no apparent variation (as is the case with some minimalist music).

- How do our swift and ethereal thoughts move our heavy and intricately mobile bodies so our actions obey the spirit of our conscious, speaking Self?

- How do we appreciate mindfulness in one another and share what is in each other’s personal and coherent consciousness when all we may perceive is the touch, the sight, the hearing, the taste and smell of one another’s bodies and their moving?

These questions echo the concern of Schutz, who in his seminal paper, “Making Music Together”, identifies that music is special because it appears to communicate without reference. Music occupies a privileged position in the information sciences as a phenomenon which makes itself available to multiple descriptions of its function, from notation to the spectral analysis of sound waves. Schutz’s essential insight was that musical meaning-making arises from the tuning-in of one person to another, through the shared experience of time.

This insight has turned out to be prescient. Recent research suggests that there is a shared psychology or “synrhythmia” and amphoteronomy involved in musical engagement. How then might this phenomenon be considered to be meaningful in the absence of reference, when in other symbolically codified contexts (e.g. language) meaning appears to be generated in other ways which can be more explicitly explained through understanding the nature of “signs”?

5 Communicative Musicality and Shannon Entropy

Recent studies on communicative musicality have reinforced Schutz’s view that the meaningfulness of musical communication rests on a shared sense of time. The relation between music and time makes manifest many deep philosophical problems: music unfolds in time, but it also expresses time, where time in music does not move at the same speed: music is capable of making time stand still. If time is considered as a dimension of constraint, alongside all the other manifestations that music presents, then the use of time as a constraint on the analysis of music itself can help unpick the dynamics of those other constraints. While one might distinguish clock-time from psychological time, what might be considered psychological time is in reality the many interacting constraints, while clock-time is an imaginary single description which allows us at least to carve up experience in ways that can be codified.

Over time, music displays many changes and produces much novelty. Old patterns give way to new patterns. At the root of all these patterns are some fundamental features which might be counted in the first instance:

1. Pitch

2. Rhythm

3. Interval

4. Harmony

5. Dynamics

6. Timbre

So what is the pattern of the Alberti bass?

If its note pattern is C-G-E-G-C-G-E-G, its rhythm is q-q-q-q-q-q-q-q, its harmony is a C major triad, and its intervallic relations are 5, -3, 3, -5, 5, -3, 3, -5, then over a period of time the entropy for each element will be:

1. Pitch: -0.232

2. Rhythm: 1

3. Interval: 0.2342

4. Harmony: 1

5. Dynamics:
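As a cross-check on the list above, here is a minimal sketch of how per-dimension Shannon entropy could be computed for one bar of the pattern. It assumes entropy in bits over relative frequencies and treats harmony as a label repeated on every note. The names `shannon_entropy` and `alberti` are illustrative, not drawn from these notes, and other counting conventions would give different figures.

```python
from collections import Counter
from math import log2


def shannon_entropy(events):
    """Shannon entropy, in bits, of the empirical distribution of `events`."""
    counts = Counter(events)
    total = len(events)
    # p * log2(1/p) summed over every distinct event that was observed
    return sum((n / total) * log2(total / n) for n in counts.values())


# One bar of the pattern described in the text, one list per dimension.
# Repeating the harmony label on every note is an assumption of this sketch.
alberti = {
    "pitch": ["C", "G", "E", "G", "C", "G", "E", "G"],
    "rhythm": ["q"] * 8,
    "interval": [5, -3, 3, -5, 5, -3, 3, -5],
    "harmony": ["C major"] * 8,
}

entropies = {name: shannon_entropy(seq) for name, seq in alberti.items()}
for name, h in entropies.items():
    print(f"{name}: {h:.3f} bits")
```

Under these assumptions the sketch gives 1.5 bits for pitch, 2 bits for interval, and 0 bits for rhythm and harmony, since a dimension that never varies carries no entropy.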

The important thing here is that novelty becomes necessary if there is insufficient variation between interacting constraints. If mutual redundancy is high, then it also means that there is very high mutual information.
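The standard mutual information between two of these dimensions can be estimated with the identity I(X;Y) = H(X) + H(Y) - H(X,Y). The sketch below is only an illustration: pairing the i-th note with the i-th listed interval is a convention assumed here, and the function names are hypothetical. It measures mutual information in the usual Shannon sense and does not attempt the "mutual redundancy" measure referred to in these notes.

```python
from collections import Counter
from math import log2


def entropy_bits(events):
    """Shannon entropy, in bits, of the empirical distribution of `events`."""
    counts = Counter(events)
    total = len(events)
    return sum((n / total) * log2(total / n) for n in counts.values())


def mutual_information(xs, ys):
    """Estimate I(X;Y) = H(X) + H(Y) - H(X,Y) from position-wise pairs."""
    if len(xs) != len(ys):
        raise ValueError("sequences must be the same length")
    joint = list(zip(xs, ys))  # each pair is one joint event
    return entropy_bits(xs) + entropy_bits(ys) - entropy_bits(joint)


pitch = ["C", "G", "E", "G", "C", "G", "E", "G"]
interval = [5, -3, 3, -5, 5, -3, 3, -5]
rhythm = ["q"] * 8

mi_pitch_interval = mutual_information(pitch, interval)
mi_pitch_rhythm = mutual_information(pitch, rhythm)
print(mi_pitch_interval, mi_pitch_rhythm)
```

With this pairing, pitch and interval share 1.5 bits (each listed interval occurs with exactly one pitch), while pitch and the unvarying rhythm share 0 bits.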

The phrasing of an Alberti bass is an important feature of its performance. For musicians it is often considered that the Alberti pattern should be played as a melody.

Consider the opening of Mozart’s piano sonata.

The playing of the C in the right hand creates an entropy of sound. Each sound decays, but the decay of C above the decay of the Alberti bass means that the context of the patterns of the other constraints changes. This means that the first bar can be considered as a combination of constraints.

The surprise comes at the beginning of the second bar. Here the pattern of the constraint of the melody changes because a different harmony is articulated, together with a sharp interval drop (a 6th), an accent, followed by a rhythmic innovation in the ornamental turn. Therefore the characteristic of the surprise is a change in entropy in multiple dimensions at once.

It is not enough to surprise somebody by simply saying “boo!”. One says “boo!” with a raised voice, a change of tone, a body gesture, and so on. The stillness which precedes this is a manifestation of entropies which are aligned.

The intellectual challenge is to work out a generative principle for a surprise.

6 A generative principle of surprise and mental process

The differentiation between different dimensions of music is an indication of the perceptual capacity expressed through relations between listeners and performers. The articulation and play between different dimensions creates the conditions for communicating conscious process. If entropies are all common across a core set of variables, then there is effectively collapse of the differentiating principles. All becomes one. But the collapse of all into one is a precursor to the establishing of something new.

In music’s unfolding process a system of immediate perception works hand-in-hand with a metasystem of expectations (an anticipatory system). This can be expressed in terms of a system’s relation to its metasystem. Because the metasystem coordinates the system, circumstances can arise where the metasystem becomes fused to the system, so that differences identified by the system become equivalent to differences identified by the metasystem. In such a case, the separation between system and metasystem breaks down. The cadence is the most telling example of this.

In a cadence, many aspects of constraint work together all tending towards a silence: harmony, melody, dynamics, rhythm, and so forth.

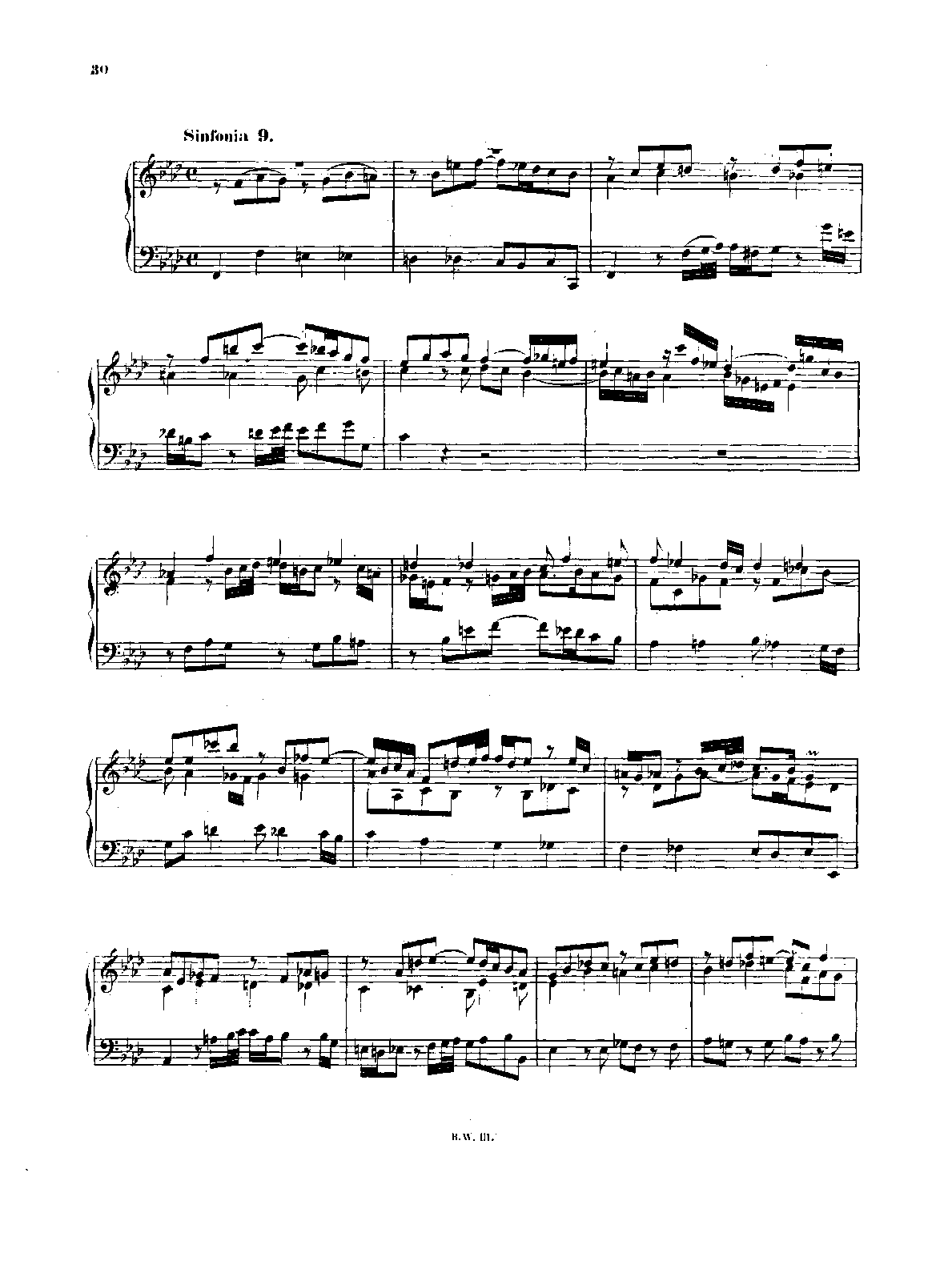

Bach’s music provides a good example of the ways in which a simple harmonic rhythm becomes embellished with levels of counterpoint, which are in effect, ways of increasing entropy in different dimensions. The melody of a Bach chorale has a particular melodic entropy, while the harmony and the bass imply a different entropy. Played “straight”, the music as a sequence of chords isn’t very interesting: the regular pulse of chords creates an expectation of harmonic progress towards the tonal centre, where all the entropies come together at the cadence:

Bach is the master of adding “interest” to this music, whereby he increases entropy in each voice by making their independent rhythms more varied, with patterns established and shared from one voice to another:

By doing this, there is never a complete “break-down” of the system: there is some latent counterpoint between different entropies in the music, meaning that even at an intermediate cadence, the next phrase is necessary to continue to unfold the logic of the conflicting overlapping redundancies.

This is the difference between Baroque music and the music of the classical period. In the classical period, short gestures simply collapse into nothing, for the system to reconstruct itself in a different way. In the Baroque, total collapse is delayed until the final cadence.

The breaking-down of the system creates the conditions for the reconstruction of stratified layers of distinction-making through a process of autocatalysis. Novelty emerges from this process of restratifying a destratified system. The destratified system exhibits a cyclic process whereby different elements create constraints which reinforce each other, much like the dynamics of a hypercycle described in chemistry, or what is described in ecology as autocatalysis.

Novelty arises as a symptom of the autocatalyzing relation between different constraints, where the amplification of pattern identifies new differences which can feed into the restratification of constructs in different levels of the system.

This can be represented cybernetically as a series of transductions at different levels. Shannon entropy of fundamental features can be used as a means of being able to generate higher level features as manifestations of convergence of lower-level features, and consequently the identification of new pattern.

It is not that music resonates with circadian rhythms. Music articulates an evolutionary dynamics which is itself encoded into the evolutionary biohistory of the cell.

7 Musical Novelty and the Problem of Ergodicity

In his introduction to the concept of entropy in information, Shannon discusses the role of transduction between senders and receivers in the communication of a message. A transducer, according to Shannon, encodes a message into signals which are transmitted in a way such that they can be successfully received across a communication medium which may degrade or disrupt the transmission. In order to overcome signal degradation, the sending transducer must add extra bits to the communication – or redundancy – such that the receiver has sufficient leeway in being able to correctly identify the message.

Music differs from this Shannon-type communication because the redundancies of its elements are central to the content of music’s communication: music consists primarily of pattern. Moreover, music’s redundancy is apparent both in its diachronic aspects (for example, the regularity of a rhythm or pulse), and in its synchronic aspects (for example, the overtones of a particular sound). Music’s unfolding is an unfolding between its synchronic and diachronic redundancy. Shannon entropy is a measure of the capacity of a system to generate variety. Shannon’s equations and his communication diagram re-represent the fundamental cybernetic principle of Ashby’s Law of Requisite Variety, which states that in order for one system to control another, it must have at least equal variety. In order for sender and receiver to communicate, the variety of the sender in transmitting the different symbols of the message must be at least equal to the variety of the receiver which reassembles those symbols into the information content. The two transducers, sender and receiver, communicate because they maintain stable distinctions between each other: information has been transferred from sender to receiver when the receiver is able to predict the message. Shannon describes this emergent predictive capacity in terms of the ‘memory’ within the transducers. It is rather like the ability to recognise a tune on hearing the first couple of notes.

In using Shannon to understand the diachronic aspects of music, we must address one of the weaknesses of Shannon’s equation in that it demands an index of elements which comprise a message (for example, letters in the alphabet). In cases where the index of elements is stable, the calculation of entropy over a long stretch of communication will approximate to entropy over shorter stretches, since the basic ordering of the elements will be similar. This property is called ergodicity and applies to those cases where the elements of a communication can be clearly identified. Music, however, is not ergodic in its unfolding: its elements which might be indexed in Shannon’s equations are emergent. Music’s unfolding reveals new motifs, themes, moods and dynamics. None of these can be known at the outset of a piece of music.
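The contrast drawn here can be illustrated by comparing the entropy of a whole sequence with the entropy of its shorter stretches. The sketch below uses two hypothetical toy sequences, which are not transcriptions of any piece: one with a fixed alphabet and ordering, and one in which new symbols appear half-way through. The window size and function names are assumptions made for illustration only.

```python
from collections import Counter
from math import log2


def entropy_bits(events):
    """Shannon entropy, in bits, of the empirical distribution of `events`."""
    counts = Counter(events)
    total = len(events)
    return sum((n / total) * log2(total / n) for n in counts.values())


def window_entropies(events, size):
    """Entropy of each consecutive, non-overlapping window of `size` events."""
    return [
        entropy_bits(events[i:i + size])
        for i in range(0, len(events) - size + 1, size)
    ]


# Hypothetical toy data: a stable alphabet versus an emergent one.
stable = list("CGEG" * 8)
emergent = list("CGEG" * 4 + "CGEGDFAB" * 2)

stable_windows = window_entropies(stable, 8)
emergent_windows = window_entropies(emergent, 8)

print("stable  ", entropy_bits(stable), stable_windows)
print("emergent", entropy_bits(emergent), emergent_windows)
```

For the stable sequence every window has the same entropy as the whole (1.5 bits). For the emergent sequence the early windows sit at 1.5 bits and the later ones at 2.75 bits, so no single stretch is representative of the whole, and the alphabet needed to compute the later values could not have been listed from the opening alone.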

In order to address this, some basic reconsideration of the relationship between order and disorder in music is required. In Shannon, order is determined by the distribution of symbols within constraints: Shannon information gives an indication of the constraint operating on the distribution. The letters in a language, for example, are constrained by the rules of grammar and spelling. If passages of text are ergodic in their order it is because the constraint operating on them is constant. Non-ergodic communications like music result from emergent constraints. This means that music generates its own constraint.

In Shannon’s theory, the measure of constraint is redundancy. So to say that music is largely redundant means that music participates in the continual generation of its own constraints. Our technical and empirical question is whether there is a way of characterising the emergent generation of constraint within Shannon’s equations.

To address the problem of emergent features, we need to address the problem of symbol-grounding. Stated most simply, the symbol grounding problem concerns the emergence of complex language from simple origins. Complex symbols and features can only emerge as a result of interactions between simple components. A further complication is that complex structures are neither arbitrary nor are they perfectly symmetrical. Indeed, if the complex structures of music, like the complex structures of nature are to be described in terms of their emergence from simple origins, they are characterized by a “broken symmetry”. In this sense, musical emergence is in line with recent thinking in physics considering the symmetry of the universe, whereby very small fluctuations cause the formation of discrete patterns.

Symmetry-breaking is a principle by which an analysis involving Shannon entropy of simple components may be used to generate more complex structures. In the next section we explain how this principle can be operationalized into a coherent information-theoretical approach to music using Shannon’s idea of Relative Entropy.

8 Relative Entropy and Symmetry-Breaking

As we have stated, music exhibits many dimensions of constraint which overlap. Each constraint dimension interferes with every other. So what happens if the entropies of two dimensions of music change in parallel? This situation can be compared to the joint pairing of metasystem and system which was discussed earlier.

If the redundancies of the many different descriptions of sound (synchronic and diachronic) constrain each other, then the effect of the redundancy of one kind of description in music on another would produce non-equilibrium dynamics that could be constitutive of new forms of pattern, new redundancy and emergent constraint. This effect of one aspect of redundancy on another can be measured with Shannon’s concept of relative entropy.

The effect of multiple constraints operating together in this way is similar to the dynamics of autocatalysis: where effectively one constraint amplifies the conditions for the other constraint. What is to stop this process leading to positive feedback? The new constraint, or new dynamic within the whole system is necessary to maintain the stability of the other constraints.

So what happens in the case of the Alberti bass? The relative entropy of the notes played, the rhythm, intervals, and so on would lead to collapse of distinctions between the components: a new component is necessary to attenuate the dynamic.

This helps explain the changes to the relative entropy in Mozart’s sonata. But does it help to explain minimalism? In the music of Glass, there is variation of timbre, and the gradual off-setting of the accompanimental patterns also helps to give the piece structure.

However, whilst relative entropy helps to explain why novelty (new ideas, motifs, harmonies, etc.) is necessary, it does not help to explain the forms that it takes.

In order to understand this, we have to understand that the symmetry-breaking principle works hand-in-hand with the autocatalytic effects of the overlap of redundancy. Novelty is never arbitrary; it is always in “harmony” with what is already there. What this means is that novelty often takes the form of one change to one dimension, whilst other dimensions remain similar in constitution.

Harmony is another way of describing a redundant description. The melody of Mozart’s piano sonata is redundant in the sense that it reinforces the harmony of the accompaniment. The listener is presented with an alternative description of something that had gone before. The redundancy is both synchronic and diachronic.

From a mathematical perspective, what occurs is a shift in entropies over time between different parts.

The coincidence of entropy relations between parts over a period of time is an indication of the emergence of novelty. Symmetry breaking occurs because the essential way out of the hypercycle is the focus on some small difference from which some new structure might emerge. This might be because the combined entropies of multiple levels throw into relief other aspects of variation in the texture.

This would explain the symmetry-breaking of the addition of new voices, the subtle organic changes in harmony and so forth. It does not, however, account for the dialectical features of much classical music. What must be noted is that the common pattern before any dialectical moment in music is punctuation, silence or cadence. For example, in the Mozart example, the first four-bar phrase is followed by a more ornamental passage where, whilst the harmony remains the same, a semiquaver rhythm takes over in the right hand, introducing more entropy into the diachronic structure.

Once again, within the dialectical features of music, it is not the case that musical moments are arbitrary. Moreover, the dialectical moments that are chosen seem to surprise continually and yet relate to previous elements. Both in the process of composing music, and in the process of listening to it, what is striking is that the shared experience between composer, performer and listener is of a shared profundity. Where does this profundity come from?

The concept of relative entropy is fundamental to all Shannon’s equations. The basic measure of entropy is relative to a notional concept of maximum entropy. This is explicit in Shannon’s equation for redundancy, where in order to specify the constraint producing a particular value of entropy, the scalar value of entropy has to be turned into a vector representing the ratio between entropy and maximum entropy. The measurement of mutual information, which is the index of how much information has been transferred, is a measurement of the entropy of the sender relative to the entropy of the receiver. The problem is that nobody has a clear idea of what maximum entropy actually is, beyond a general statement (borrowed from Ashby) that it is the measurement of the maximum number of states in a system. In a non-ergodic emergent communication, there is no possibility of being able to establish maximum entropy. Relative entropy necessarily involves studying the change of entropy in one dimension relative to the change of entropy in another. One section of music over a particular time period might exhibit a set of descriptions or variables, each of which can be assigned an entropy (for example, the rhythm or the distribution of notes played). At a different point in time, the relationship between the entropies of the same variables will be different. If we assume that the cause of this difference is the emergent effects of one constraint over another, then it would be possible to plot the shifts in entropy from one point to the next and study their relative effects, producing a graph.
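A minimal sketch of the procedure described in this paragraph follows: entropies are computed per dimension over successive windows, and the shifts from one window to the next are tabulated so that they could be plotted. The two-bar fragment is hypothetical toy data, and the window size, the function names and the choice of H_max = log2(alphabet size) are all assumptions of the sketch. Note that "relative entropy" is used here in the sense of this text (entropy as a ratio of maximum entropy), not in the sense of Kullback-Leibler divergence.

```python
from collections import Counter
from math import log2


def entropy_bits(events):
    """Shannon entropy, in bits, of the empirical distribution of `events`."""
    counts = Counter(events)
    total = len(events)
    return sum((n / total) * log2(total / n) for n in counts.values())


def relative_entropy(events, alphabet_size):
    """Ratio H / H_max, with H_max assumed to be log2(alphabet_size)."""
    if alphabet_size < 2:
        return 0.0  # assumed convention: a one-symbol alphabet has no entropy
    return entropy_bits(events) / log2(alphabet_size)


def entropy_profile(dimensions, size):
    """Per-dimension entropy for each consecutive window of `size` events."""
    length = min(len(seq) for seq in dimensions.values())
    return [
        {name: entropy_bits(seq[i:i + size]) for name, seq in dimensions.items()}
        for i in range(0, length - size + 1, size)
    ]


def entropy_shifts(profile):
    """Change in each dimension's entropy from one window to the next."""
    return [
        {name: later[name] - earlier[name] for name in earlier}
        for earlier, later in zip(profile, profile[1:])
    ]


# Hypothetical fragment: bar 1 repeats a pattern, bar 2 varies pitch and rhythm.
dimensions = {
    "pitch": list("CGEGCGEG") + list("DGFGBGFG"),
    "rhythm": ["q"] * 8 + ["q", "q", "e", "e", "q", "q", "e", "e"],
}

profile = entropy_profile(dimensions, 8)
shifts = entropy_shifts(profile)
print(profile)
print(shifts)

# The same bar measured against two different assumed maxima.
bar1_pitch = dimensions["pitch"][:8]
ratio_three_symbols = relative_entropy(bar1_pitch, 3)
ratio_twelve_symbols = relative_entropy(bar1_pitch, 12)
print(ratio_three_symbols, ratio_twelve_symbols)
```

In this toy case both dimensions rise together between the two bars (pitch by 0.25 bits, rhythm by 1 bit). The last two lines show how strongly the ratio depends on what is taken as the maximum: the same bar looks close to maximal against a three-note alphabet and far from it against twelve.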

Such an approach is revealing because it highlights where entropies move together and where they move apart. What it doesn’t do is address the non-ergodicity problem of where new categories of description (with new entropies) are produced, how to identify them, and how to think about the effect that new categories of redundancy have on existing categories. Does the emergence of a new theme override its origins? Does the articulation of a deeper structure override the articulation of the parts which constitute it? However, the analysis can progress from relative entropy to address these questions if we begin by looking at the patterns of relative entropy from an initial set of variables (say, notes, dynamics, rhythm, harmony). If new dimensions are seen to be emergent from particular patterns of inter-relation between these initial dimensions, then a mechanism for the identification of new dimensions can be articulated. In effect, this is a second-order analysis of first-order changes in relative entropy.

A second point is that first-order, second-order and nth-order dimensions of constraint will each interfere with each other over time. The experience of listening to music is a process of shifts between different orders of description. In Schenker’s music-analytical graphs, this basic idea is represented as different layers of description: deep structure, middleground and foreground. A Shannon equivalent is relative entropy, relative entropy of relative entropy, relative entropy of relative entropy of relative entropy, and so on.

Shannon entropy has fascinated music scholars, both in the analysis of music (for example, Herbert Brun) and in methods for the construction of musical composition (Xenakis). Here we offer a new perspective drawing on the application of Shannon entropy to the analysis of scientific discourse. In this work, we have explored how mutual redundancy between different dimensions of communication in a discourse can be used as an index of meaning. In applying this approach to music, we consider the ways in which emergent mutual redundancy and relative entropy between different descriptions of music as it unfolds can identify similar patterns of meaning-making in musical communication.
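The sketch below shows one way such a measure between descriptions could be computed. It assumes the standard definition of mutual information between two dimensions, T_xy = H_x + H_y - H_xy, and the alternating-sum extension to three dimensions, T_xyz = H_x + H_y + H_z - H_xy - H_xz - H_yz + H_xyz. Reading a negative three-way value as mutual redundancy follows some of the literature on the communication of meaning; whether it matches the measure intended here is an assumption. The three toy descriptions are hypothetical.

```python
import math
from collections import Counter

def entropy(*columns):
    """Joint Shannon entropy, in bits, of one or more aligned sequences."""
    joint = list(zip(*columns))
    total = len(joint)
    return sum(-(c / total) * math.log2(c / total)
               for c in Counter(joint).values())

def mutual_information(x, y):
    """T_xy = H_x + H_y - H_xy; this quantity cannot be negative."""
    return entropy(x) + entropy(y) - entropy(x, y)

def three_way_information(x, y, z):
    """T_xyz as an alternating sum of entropies; this quantity can be negative."""
    return (entropy(x) + entropy(y) + entropy(z)
            - entropy(x, y) - entropy(x, z) - entropy(y, z)
            + entropy(x, y, z))

# Hypothetical toy descriptions of four successive events.
pitch = ["lo", "lo", "hi", "hi"]
duration = ["short", "long", "short", "long"]
dynamics = ["p", "f", "f", "p"]  # loud exactly when pitch and duration "disagree"

pairwise = mutual_information(pitch, duration)
three_way = three_way_information(pitch, duration, dynamics)
```

In this toy case no pair of descriptions shares any information (each pairwise value is 0 bits), yet the three taken together give a value of -1 bit. The relation only appears when all three descriptions are considered at once, which is the kind of pattern a pairwise comparison of entropies would miss.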

9 Common origins expressed through time

One of the problems of Shannon entropy, and of the transduction which it essentially expresses, is that, as an aspect of Ashby’s law, it is fundamentally conservative: the Shannon communicative model manages variety, in the same way that the different levels of regulation within Beer’s viable system model work together to articulate the coherence of the whole. What Shannon entropy does not do is articulate a dynamic whereby new things are created. Yet in order to account for music’s continual evolution, and also to account for the shared recognition of profundity, we need an account of the generation of profundity from some common, and simple, origin.

Beer suggested a way of doing this in his later work which articulates his “Platform for change”. Here the process of emergence is fundamentally about the management of uncertainty.

Uncertainty is an important metaphor for understanding music. After all, for any composer, the question is always “what should I do next?” The choices that face a composer are innumerable. In biology, there is evidence that cellular organization is also coordinated around uncertainty (Torday).

Uncertainty is an aspect of variety: it is basically the failure of a metasystem to manage the variety of a system, or at least its failure to identify that which needs to be attenuated, or to attend to the appropriate thing. Yet the dialectical truth of music is that identity is always preserved amidst fluctuating degrees of uncertainty. This can be drawn as a diagram in which whatever category is identified (rhythm, notes, or whatever else is counted within Shannon entropy) contains within it its own uncertainty of effective identification.

The uncertainty of identification must be managed through the identification of a category, and this process – a metaprocess of categorical identification – interferes with other processes. In effect it codifies particular events in particular values. Yet the values which are codified will be challenged or changed at some point.

The coordination of uncertainty produces a generative dynamic which can under certain circumstances produce a symmetry. But the critical question is why the symmetry which is produced is common. An answer may lie in the origin of the cell.

I’ve got a diagram to show this. The fundamental feature of an improved mechanism to explain the emergent dynamics of musical innovation is that there is a dual transduction process: an inner transduction process which maintains balance within the identity of an entity, and an outer transduction process which maintains the relation between the identity and its environment. It should be noted that it is precisely this mechanism that exists in cellular communication through the production of protein receptors on the cell surface and the interaction between cells on the outside.

10 Communicative Musicality and relative entropy

Schutz’s understanding of musical communication as a shared experience of time now requires some reflection. If music is the shared experience of time, then what can an entropy-based approach contribute to our understanding of time?

The experience of music is intersubjective: a shared object of sound provides a common context for the articulation of personal experience, and consequently creates a ground of being for communication. This in turn can facilitate the sharing of words, the codified aspects of communication which Luhmann talks about.

The remarkable thing with music is that the symmetry breaking of one individual is remarkably like the symmetry breaking of another individual. The choices for advanced emergent structures do not appear to be arbitrary. This may well be because of the synchronic structure of the materiality of sound. However it may go deeper than this. It may be that the essential physical constitution of the cell carries with it some pre-echoes of the physics of sound, which may at some point be united in fundamental physical mechanisms (probably quantum mechanical).

There is a need for empirical work to search for the biological roots of music. The work in communicative musicality provides a starting point for doing this.